Today you can build a voice assistant for your website without much time or effort: all it takes is some gumption and a little know-how. Chatbot functionality and quality are improving rapidly, and the progress is snowballing. The popularity of chatbot widgets shows their value and the user experience people have come to expect. We are getting used to self-service, but still want some personalized attention.

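Mobile users are familiar with voice assistants that recognize speech commands, such as Siri, the Google Assistant, and Alexa. With Web Speech API support in browsers, it is also possible to speak to browser-based bots directly, although speech recognition in some browsers (Chrome among them) relies on a server-side engine and so needs a network connection.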

In this article, we build a chat that runs entirely in the web browser, with a bot that listens to your voice commands and speaks back to you.

The browser for this demo has to support both speech recognition and speech synthesis (currently, the two work together only in Chromium-based browsers).
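Before wiring up any voice features, it can help to check what the current browser actually offers. The following is a minimal sketch, not part of the original demo: `detectSpeechSupport` is a hypothetical helper name, and it only checks that the relevant globals exist, which does not guarantee that recognition will work (for example, the user may deny microphone access).

```javascript
// Hypothetical helper (not part of the original demo): reports which
// Web Speech API features the given global object exposes.
function detectSpeechSupport(root) {
  const Recognition = root.SpeechRecognition || root.webkitSpeechRecognition
  return {
    recognition: typeof Recognition === "function",
    synthesis:
      "speechSynthesis" in root &&
      typeof root.SpeechSynthesisUtterance === "function",
  }
}

// In a browser pass `window`; elsewhere fall back to an empty object.
const support = detectSpeechSupport(typeof window !== "undefined" ? window : {})
if (!support.recognition || !support.synthesis) {
  console.log("Voice mode unavailable; the chat can fall back to typed messages.")
}
```

Taking the global object as a parameter keeps the check easy to exercise outside a browser, and the result can decide whether to show a "Speak" button at all.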

Part 1 - Installation

To build this demo, you need Node.js installed; see the instructions on the Node.js website.

Create a directory for this application ("speakbot"). Then inside this new directory initialize the application with default settings:

npm init --yes

and install dependencies:

npm i @nlpjs/core @nlpjs/lang-en-min @nlpjs/nlp

To generate the web bundle, you need two development libraries: browserify and terser.

npm i -D browserify terser

Open your package.json and add this into the scripts section:

"dist": "browserify ./lib.js | terser --compress --mangle > ./bundle.js"

Part 2 - The AI

The "AI" part of our application will be NLP.js - a general natural language utility for Node.js development.

First, we need to build a bundle file, which contains the framework parts we are going to use. Create a file named "lib.js" with the following content:

const core = require("@nlpjs/core")
const nlp = require("@nlpjs/nlp")
const langenmin = require("@nlpjs/lang-en-min")

// expose the NLP.js parts to browser scripts as window.nlpjs
window.nlpjs = { ...core, ...nlp, ...langenmin }

Second, compile the bundle:

npm run dist

Third, create a file named "index.js" with the following content:

const { containerBootstrap, Nlp, LangEn } = window.nlpjs

;(async () => {
  const container = await containerBootstrap()
  container.use(Nlp)
  container.use(LangEn)
  const nlp = container.get("nlp")
  nlp.settings.autoSave = false
  nlp.addLanguage("en")

  // Adds the utterances and intents for the NLP
  nlp.addDocument("en", "goodbye for now", "greetings.bye")
  nlp.addDocument("en", "bye bye take care", "greetings.bye")
  nlp.addDocument("en", "okay see you later", "greetings.bye")
  nlp.addDocument("en", "bye for now", "greetings.bye")
  nlp.addDocument("en", "i must go", "greetings.bye")
  nlp.addDocument("en", "hello", "greetings.hello")
  nlp.addDocument("en", "hi", "greetings.hello")
  nlp.addDocument("en", "howdy", "greetings.hello")

  // Train also the NLG
  nlp.addAnswer("en", "greetings.bye", "Till next time")
  nlp.addAnswer("en", "greetings.bye", "see you soon!")
  nlp.addAnswer("en", "greetings.hello", "Hey there!")
  nlp.addAnswer("en", "greetings.hello", "Greetings!")

  await nlp.train()

  // Test it
  const response = await nlp.process("en", "I should go now")
  console.log(response)
})()

Here we use the NLP.js example from their documentation; feel free to create your own intents.
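If you do create your own intents, keeping them in a plain data structure can be easier to maintain than a long list of calls. The sketch below is one possible approach, not something NLP.js prescribes: the `intents` object layout and the `loadIntents` helper are hypothetical names introduced for illustration, and the helper relies only on the `addDocument` and `addAnswer` calls already used in this article.

```javascript
// Hypothetical data layout (an assumption, not an NLP.js format):
// each intent lists sample utterances and candidate answers.
const intents = {
  "greetings.bye": {
    utterances: ["goodbye for now", "i must go"],
    answers: ["Till next time", "see you soon!"],
  },
  "greetings.hello": {
    utterances: ["hello", "hi", "howdy"],
    answers: ["Hey there!", "Greetings!"],
  },
}

// Registers every utterance and answer through the same addDocument and
// addAnswer calls used in index.js. `nlp` is the object returned by
// container.get("nlp").
function loadIntents(nlp, locale, intentMap) {
  for (const [intent, { utterances, answers }] of Object.entries(intentMap)) {
    utterances.forEach(text => nlp.addDocument(locale, text, intent))
    answers.forEach(text => nlp.addAnswer(locale, intent, text))
  }
}
```

In index.js this would be called as `loadIntents(nlp, "en", intents)` before `await nlp.train()`.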

To execute this code in the browser, you need a simple HTML file "index.html" with the following content:

<html>
  <head>
    <title>Speak</title>
    <script src='./bundle.js'></script>
    <script src='./index.js'></script>
  </head>
  <body>
  </body>
</html>

Open the "index.html" file in the browser and look at the console (press F12).

Part 3 - The UI

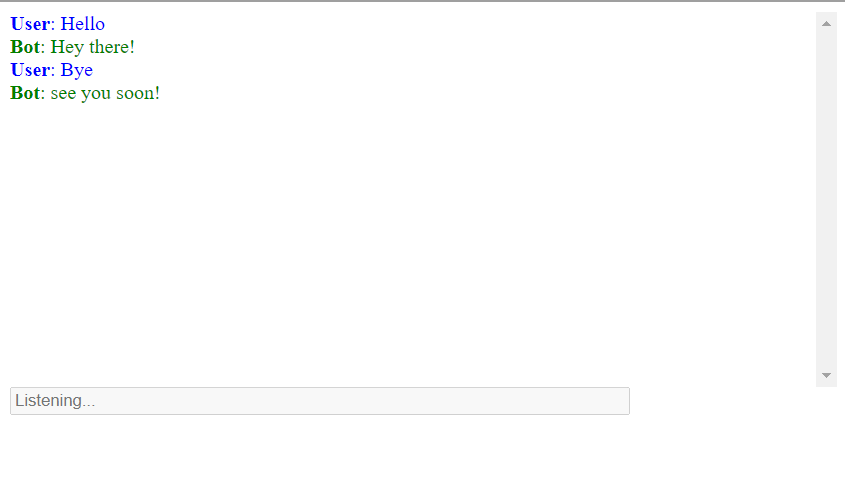

Now you need a simple chat interface to speak to the AI assistant. Add UI elements to the "index.html":

<html>
  <head>
    <title>Speak</title>
    <script src="./bundle.js"></script>
    <script src="./index.js"></script>
  </head>
  <body>
    <div id="history" style="height: 300px; overflow-y: scroll;"></div>
    <form>
      <input id="message" placeholder="Type your message" style="width: 70%;" />
      <button id="send" type="submit">Send</button>
    </form>
  </body>
</html>

Then modify the "index.js" to use our form:

const { containerBootstrap, Nlp, LangEn } = window.nlpjs

// shorthand function
const el = document.getElementById.bind(document)

// delay initialization until the form is created
// (the scripts are loaded in <head>, before <body> is parsed)
window.addEventListener("DOMContentLoaded", async () => {
  const container = await containerBootstrap()
  container.use(Nlp)
  container.use(LangEn)
  const nlp = container.get("nlp")
  nlp.settings.autoSave = false
  nlp.addLanguage("en")

  // Adds the utterances and intents for the NLP
  nlp.addDocument("en", "goodbye for now", "greetings.bye")
  nlp.addDocument("en", "bye bye take care", "greetings.bye")
  nlp.addDocument("en", "okay see you later", "greetings.bye")
  nlp.addDocument("en", "bye for now", "greetings.bye")
  nlp.addDocument("en", "i must go", "greetings.bye")
  nlp.addDocument("en", "hello", "greetings.hello")
  nlp.addDocument("en", "hi", "greetings.hello")
  nlp.addDocument("en", "howdy", "greetings.hello")

  // Train also the NLG
  nlp.addAnswer("en", "greetings.bye", "Till next time")
  nlp.addAnswer("en", "greetings.bye", "see you soon!")
  nlp.addAnswer("en", "greetings.hello", "Hey there!")
  nlp.addAnswer("en", "greetings.hello", "Greetings!")

  await nlp.train()

  // form submit event
  async function onMessage(event) {
    if (event) event.preventDefault()
    const msg = el("message").value
    el("message").value = ""
    if (!msg) return

    const userElement = document.createElement("div")
    userElement.innerHTML = "<b>User</b>: " + msg
    userElement.style.color = "blue"
    el("history").appendChild(userElement)

    const response = await nlp.process("en", msg)
    const answer = response.answer || "I don't understand."

    const botElement = document.createElement("div")
    botElement.innerHTML = "<b>Bot</b>: " + answer
    botElement.style.color = "green"
    el("history").appendChild(botElement)
  }

  // Add form submit event listener
  document.forms[0].onsubmit = onMessage
})

Open the "index.html" in the browser and test our chat interface.
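One thing to keep in mind with this interface: the typed message is placed into the page through `innerHTML`, so any markup in it is interpreted by the browser. For a local demo this is harmless, but for a real site you would likely want to escape the text first (or use `textContent`). Below is a minimal sketch of the escaping approach; `escapeHtml` and `formatChatLine` are hypothetical helper names, not part of the original code.

```javascript
// Hypothetical helper: replaces the characters that have special meaning in
// HTML so that user text is displayed literally instead of being parsed.
const HTML_ESCAPES = {
  "&": "&amp;",
  "<": "&lt;",
  ">": "&gt;",
  '"': "&quot;",
  "'": "&#39;",
}

function escapeHtml(text) {
  return String(text).replace(/[&<>"']/g, ch => HTML_ESCAPES[ch])
}

// Builds one chat line with the speaker label in bold and the message escaped.
function formatChatLine(speaker, message) {
  return "<b>" + escapeHtml(speaker) + "</b>: " + escapeHtml(message)
}

console.log(formatChatLine("User", "<img src=x onerror=alert(1)>"))
// prints: <b>User</b>: &lt;img src=x onerror=alert(1)&gt;
```

With these helpers, the assignment in `onMessage` could become `userElement.innerHTML = formatChatLine("User", msg)`.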

Part 4 - The voice

To give our bot a voice interface, we use the browser's SpeechRecognition and speechSynthesis APIs.

Currently, only Google Chrome fully supports both at the same time. Modify the "index.js" with the following:

const { containerBootstrap, Nlp, LangEn } = window.nlpjs

// shorthand function
const el = document.getElementById.bind(document)

function capitalize(string) {
  return string.charAt(0).toUpperCase() + string.slice(1)
}

// initialize speech recognition
const SpeechRecognition =
  window.SpeechRecognition || window.webkitSpeechRecognition
const recognition = SpeechRecognition ? new SpeechRecognition() : null

// how long to listen before sending the message
const MESSAGE_DELAY = 3000

// timer variable
let timer = null
let recognizing = false

// delay initialization until the form is created
// (the scripts are loaded in <head>, before <body> is parsed)
window.addEventListener("DOMContentLoaded", async () => {
  const container = await containerBootstrap()
  container.use(Nlp)
  container.use(LangEn)
  const nlp = container.get("nlp")
  nlp.settings.autoSave = false
  nlp.addLanguage("en")

  // Adds the utterances and intents for the NLP
  nlp.addDocument("en", "goodbye for now", "greetings.bye")
  nlp.addDocument("en", "bye bye take care", "greetings.bye")
  nlp.addDocument("en", "okay see you later", "greetings.bye")
  nlp.addDocument("en", "bye for now", "greetings.bye")
  nlp.addDocument("en", "i must go", "greetings.bye")
  nlp.addDocument("en", "hello", "greetings.hello")
  nlp.addDocument("en", "hi", "greetings.hello")
  nlp.addDocument("en", "howdy", "greetings.hello")

  // Train also the NLG
  nlp.addAnswer("en", "greetings.bye", "Till next time")
  nlp.addAnswer("en", "greetings.bye", "see you soon!")
  nlp.addAnswer("en", "greetings.hello", "Hey there!")
  nlp.addAnswer("en", "greetings.hello", "Greetings!")

  await nlp.train()

  // initialize speech generation
  let synthVoice = null
  if ("speechSynthesis" in window && recognition) {
    // wait until voices are ready
    window.speechSynthesis.onvoiceschanged = () => {
      synthVoice = text => {
        clearTimeout(timer)
        const synth = window.speechSynthesis
        const utterance = new SpeechSynthesisUtterance()
        // select some english voice
        const voice = synth.getVoices().find(voice => {
          return voice.localService && voice.lang === "en-US"
        })
        if (voice) utterance.voice = voice
        utterance.text = text
        synth.speak(utterance)
        timer = setTimeout(onMessage, MESSAGE_DELAY)
      }
    }
  }

  // form submit event
  async function onMessage(event) {
    if (event) event.preventDefault()
    const msg = el("message").value
    el("message").value = ""
    if (!msg) return

    const userElement = document.createElement("div")
    userElement.innerHTML = "<b>User</b>: " + msg
    userElement.style.color = "blue"
    el("history").appendChild(userElement)

    const response = await nlp.process("en", msg)
    const answer = response.answer || "I don't understand."

    const botElement = document.createElement("div")
    botElement.innerHTML = "<b>Bot</b>: " + answer
    botElement.style.color = "green"
    el("history").appendChild(botElement)

    if (synthVoice && recognizing) synthVoice(answer)
  }

  // Add form submit event listener
  document.forms[0].onsubmit = onMessage

  // if speech recognition is supported then add elements for it
  if (recognition) {
    // add speak button
    const speakElement = document.createElement("button")
    speakElement.id = "speak"
    speakElement.innerText = "Speak!"
    speakElement.onclick = e => {
      e.preventDefault()
      recognition.start()
    }
    document.forms[0].appendChild(speakElement)

    // add "interim" element
    const interimElement = document.createElement("div")
    interimElement.id = "interim"
    interimElement.style.color = "grey"
    document.body.appendChild(interimElement)

    // configure continuous speech recognition
    recognition.continuous = true
    recognition.interimResults = true
    recognition.lang = "en-US"

    // switch to listening mode
    recognition.onstart = function () {
      recognizing = true
      el("speak").style.display = "none"
      el("send").style.display = "none"
      el("message").disabled = true
      el("message").placeholder = "Listening..."
    }

    recognition.onerror = function (event) {
      alert(event.error)
    }

    // switch back to type mode
    recognition.onend = function () {
      el("speak").style.display = "inline-block"
      el("send").style.display = "inline-block"
      el("message").disabled = false
      el("message").placeholder = "Type your message"
      el("interim").innerText = ""
      clearTimeout(timer)
      onMessage()
      recognizing = false
    }

    // speech recognition result event;
    // append recognized text to the form input and display interim results
    recognition.onresult = event => {
      clearTimeout(timer)
      timer = setTimeout(onMessage, MESSAGE_DELAY)
      let transcript = ""
      for (let i = event.resultIndex; i < event.results.length; ++i) {
        if (event.results[i].isFinal) {
          let msg = event.results[i][0].transcript
          if (!el("message").value) msg = capitalize(msg.trimStart())
          el("message").value += msg
        } else {
          transcript += event.results[i][0].transcript
        }
      }
      el("interim").innerText = transcript
    }
  }
})

Open the "index.html" in the browser. Press the "Speak!" button, allow microphone usage, and talk to our new voice assistant.
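Two pieces of the voice logic above are easy to pull out into small functions that can be tried without a microphone: choosing a voice, and separating final from interim recognition results. The sketch below is an illustration under stated assumptions rather than a drop-in replacement. `pickVoice` and `collectTranscripts` are hypothetical names, plain objects stand in for the browser's voice and result objects, and `pickVoice` differs from the demo in that it falls back to a non-local voice of the same language before giving up.

```javascript
// Hypothetical helper: prefers a local voice for the language, then any voice
// for that language, and finally returns null so the browser default is used.
function pickVoice(voices, lang) {
  const matching = voices.filter(voice => voice.lang === lang)
  return matching.find(voice => voice.localService) || matching[0] || null
}

// Hypothetical helper mirroring the onresult loop: splits the results starting
// at resultIndex into finalized text and interim (still changing) text.
function collectTranscripts(results, resultIndex) {
  let finalText = ""
  let interimText = ""
  for (let i = resultIndex; i < results.length; i++) {
    const transcript = results[i][0].transcript
    if (results[i].isFinal) finalText += transcript
    else interimText += transcript
  }
  return { finalText, interimText }
}

// Plain objects stand in for the voices returned by speechSynthesis.getVoices().
const voices = [
  { name: "Remote US", lang: "en-US", localService: false },
  { name: "Local UK", lang: "en-GB", localService: true },
]
console.log(pickVoice(voices, "en-US").name) // prints: Remote US
```

In the demo, `collectTranscripts(event.results, event.resultIndex)` would supply the text appended to the input (`finalText`) and the grey preview line (`interimText`).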

Getting Started

Modern browsers already have the features needed to recognize and generate speech. Together with JavaScript- or WebAssembly-based NLP frameworks, building custom, portable voice assistants that work entirely in the browser or connect to some API is completely doable.

Our simplest standalone voice assistant is written in just about 160 lines of code, thanks to NLP.js text processing features and Google Chrome speech recognition and synthesis.

There are also plenty of other usable NLP libraries and SaaS solutions with their own unique features, so you can choose the one that best fits your needs. You can download our tutorial bundle in one HTML file here.

Intersog builds custom solutions in AI, IoT and web software. We are a perfect partner when embarking on new technologies. Web-based voice assistants are becoming more and more commonplace and are a great place to begin amplifying your customer engagement and service.