Apache Spark has created a buzz in the world of Big Data. It promises to run up to 100 times faster than Hadoop MapReduce while offering more comfortable APIs, which raises the question: could this be the beginning of the end for MapReduce?

source: dezyre.com

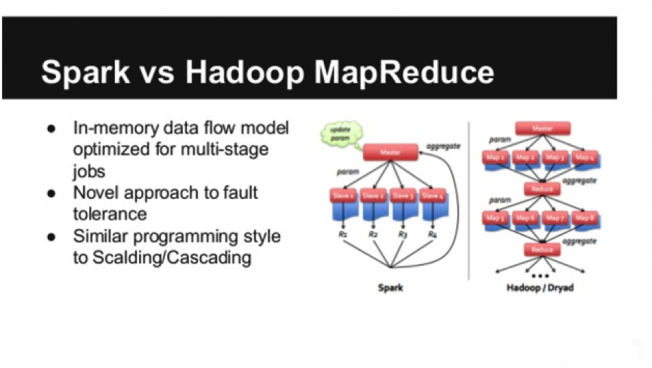

How can an open-source framework such as Apache Spark process data that fast? The reason is that Spark keeps its working data in memory across the cluster and is not tied to Hadoop’s two-stage MapReduce paradigm, so repeated access to the same data is much faster.

Spark can run on Hadoop YARN or in standalone mode, and it can read data directly from HDFS. It has already been adopted by companies such as Intel, Baidu, Groupon, Yahoo and Trend Micro.

This article compares the two platforms to see whether Spark is really on its way to displacing Hadoop MapReduce.

Performance

Hadoop MapReduce writes its results back to disk after each map or reduce step, while Spark processes data in memory. As a result, Apache Spark generally outperforms Hadoop MapReduce.
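To make the two-stage model concrete, here is a minimal pure-Python sketch of a word count split into map, shuffle and reduce phases. It is a toy illustration that runs in a single process, not Hadoop’s actual Java API; the function names (`map_phase`, `shuffle_phase`, `reduce_phase`, `word_count`) are made up for this example. The comments mark the points where a real Hadoop job writes to disk.

```python
from collections import defaultdict
from typing import Dict, Iterable, Iterator, List, Tuple


def map_phase(lines: Iterable[str]) -> Iterator[Tuple[str, int]]:
    """Map: emit a (word, 1) pair for every word in every input line."""
    for line in lines:
        for word in line.lower().split():
            yield word, 1


def shuffle_phase(pairs: Iterable[Tuple[str, int]]) -> Dict[str, List[int]]:
    """Shuffle: group all values by key.

    In a real Hadoop job the map output is written to local disk
    before it is transferred to the reducers.
    """
    grouped: Dict[str, List[int]] = defaultdict(list)
    for key, value in pairs:
        grouped[key].append(value)
    return dict(grouped)


def reduce_phase(grouped: Dict[str, List[int]]) -> Dict[str, int]:
    """Reduce: collapse each key's values into a single count.

    In a real Hadoop job the reducer output is written to HDFS.
    """
    return {key: sum(values) for key, values in grouped.items()}


def word_count(lines: Iterable[str]) -> Dict[str, int]:
    """Chain the three phases into one toy job."""
    return reduce_phase(shuffle_phase(map_phase(lines)))
```

Calling `word_count(["spark and hadoop", "spark on yarn"])` counts `spark` twice and every other word once. A pipeline built from several such jobs pays the disk round trip at every step, which is the overhead Spark avoids by keeping intermediate data in memory.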

On the flip side, Spark requires more memory, since it loads data and processes into memory and keeps them cached there for a while, much as a standard database does. When Spark runs on YARN alongside other resource-intensive services, or when the data is too big to fit in memory, its performance can degrade significantly. MapReduce, by contrast, kills its processes as soon as a job completes, so it can run alongside resource-intensive services without a major impact on performance.

For iterative computations that pass over the same data many times, Spark keeps the upper hand. For single-pass ETL tasks such as data integration or transformation, MapReduce is the better option.
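Why iteration favors an in-memory engine can be shown with a small toy model. The sketch below is plain Python, not Spark code: the hypothetical `CountingSource` class stands in for slow storage and simply counts how often the dataset is loaded, and the "algorithm" is an arbitrary refinement loop chosen only because it needs several passes over the same data.

```python
from typing import List


class CountingSource:
    """Stand-in for slow storage that records how often it is read."""

    def __init__(self, data: List[float]) -> None:
        self._data = data
        self.reads = 0

    def load(self) -> List[float]:
        self.reads += 1
        return list(self._data)


def refine(estimate: float, data: List[float]) -> float:
    """One iteration: move the estimate halfway toward the data mean."""
    mean = sum(data) / len(data)
    return 0.5 * (estimate + mean)


def iterate_without_cache(source: CountingSource, passes: int) -> float:
    """Reload the dataset on every pass, like a chain of disk-backed jobs."""
    estimate = 0.0
    for _ in range(passes):
        estimate = refine(estimate, source.load())
    return estimate


def iterate_with_cache(source: CountingSource, passes: int) -> float:
    """Load the dataset once and reuse the in-memory copy on every pass."""
    cached = source.load()
    estimate = 0.0
    for _ in range(passes):
        estimate = refine(estimate, cached)
    return estimate
```

Both functions return the same estimate, but with `passes=3` the uncached version reads the source three times and the cached version reads it once. For a single pass the two are identical, which is why caching brings little to one-shot ETL jobs.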

The verdict

Apache Spark performs better when there is enough memory to hold all the data, for example on dedicated clusters. Hadoop MapReduce was designed for situations where memory is limited or where the job has to run alongside other services.

Ease of Use

Apache Spark comes with user-friendly APIs for Scala, Python and Java, as well as Spark SQL for those who lean towards SQL. Spark’s simple building blocks make user-defined functions easy to write. In addition, there is an interactive mode in which commands can be run with immediate feedback.

Hadoop MapReduce is Java-based and is known for being difficult to program. Pig makes it easier, though it takes time to master the syntax, and Hive adds SQL compatibility. There are also Hadoop tools that can run MapReduce tasks without any programming or deployment.

MapReduce has no interactive mode. However, Hive comes with a command-line interface, and projects such as Presto, Tez and Impala aim to make full interactive querying possible.

In terms of installation and maintenance, Spark doesn’t require Hadoop. However, both platforms are included in certain distributions, such as Cloudera’s CDH 5 and Hortonworks’ HDP 2.2.

The verdict

Spark is easier to program and includes an interactive mode. MapReduce is more difficult to program, but many tools are available to make the job easier.

Cost

Hadoop MapReduce and Spark are both open-source platforms, but there are still costs to be incurred in staffing and machinery. Both platforms can run in the cloud or on commodity servers, and their hardware requirements are broadly similar.

A Spark cluster should have at least as much memory as the amount of data to be processed, since the data has to fit in memory for optimal performance. Therefore, if you need to process very large volumes of data, Hadoop is the much cheaper option, since disk space costs less than memory.

Conversely, given Spark’s benchmark results, it should be the more cost-effective option, since it needs less hardware to complete the same amount of work in less time. This applies particularly to cloud deployments, where computing power is paid for per use.

With regard to staffing, MapReduce experts remain scarce even though Hadoop has been around for a decade. Spark has been on the market for only five years, but its learning curve is less steep than Hadoop’s. Even so, Hadoop MapReduce experts currently outnumber Spark experts.
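The trade-off between cheaper disk-based nodes and faster memory-heavy nodes comes down to simple arithmetic when compute is billed per hour. The sketch below is a rough model under stated assumptions: the `ClusterPlan` class, the hourly rates and the job durations are all hypothetical figures chosen for illustration, not measured prices or benchmarks.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ClusterPlan:
    """A hypothetical pay-per-use cluster configuration."""

    nodes: int
    hourly_rate_per_node: float  # assumed price, in any currency unit
    job_hours: float             # assumed wall-clock time for one job

    def job_cost(self) -> float:
        """Cost of one job: nodes x hourly rate x hours."""
        return self.nodes * self.hourly_rate_per_node * self.job_hours


def break_even_speedup(disk_rate: float, memory_rate: float) -> float:
    """Speedup the pricier nodes need in order to cost the same per job."""
    return memory_rate / disk_rate


# Illustrative numbers only: memory-heavy nodes cost twice as much per hour
# but are assumed to finish the same job four times faster.
disk_plan = ClusterPlan(nodes=10, hourly_rate_per_node=0.50, job_hours=4.0)
memory_plan = ClusterPlan(nodes=10, hourly_rate_per_node=1.00, job_hours=1.0)

disk_cost = disk_plan.job_cost()      # 10 * 0.50 * 4.0
memory_cost = memory_plan.job_cost()  # 10 * 1.00 * 1.0
```

With these assumed figures the memory-heavy plan costs half as much per job despite the higher hourly rate. The break-even point is a speedup equal to the price ratio (here 2x); below that, the cheaper disk-based nodes win.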

What’s more, given the prevalence of Hadoop-as-a-Service packages, remote DBA support and other Hadoop-based services, the costs of hardware and in-house staffing can become non-issues. Spark, being relatively new, does not yet enjoy a comparable range of Spark-as-a-Service options.

The verdict

Going by its benchmarks, Spark is the more cost-effective platform, but staffing it will be more expensive. Hadoop MapReduce may work out cheaper because more experts and more as-a-service offerings are available for it.

Compatibility

Spark can run standalone, in the cloud, on Mesos or on Hadoop YARN. It supports any data source that implements Hadoop’s InputFormat, and hence can be integrated with all the file formats and data sources that Hadoop supports. The Spark website also states that Spark can work with business intelligence tools through ODBC and JDBC. Integration with Hive and Pig is currently being worked on.

The verdict

Spark can therefore work and integrate with all the data sources and file types that Hadoop MapReduce supports.

Data Processing

Spark does more than simply process data; it can also use existing machine-learning libraries and process graphs. Given its high performance, Spark is capable of both real-time and batch processing. This gives an organization the opportunity to run everything on a single platform rather than splitting tasks between different platforms, each of which would need skilled staff for maintenance and management.

MapReduce excels at batch processing. Real-time processing would require an additional platform such as Impala or Storm, and graph processing would require Giraph. Machine learning on MapReduce used to be done with Apache Mahout, but Mahout has since been abandoned in favor of H2O and Spark.

The verdict

Spark is your all-in-one data processing solution, but Hadoop MapReduce still comes out ahead for batch processing.

Conclusion

While Spark is the beautiful new toy in the Big Data field, there are many use cases where Hadoop MapReduce is ideal.

Spark’s in-memory data processing makes it both high-performing and cost-effective, and it is compatible with all the file types and formats that Hadoop supports. APIs in multiple languages and a gentler learning curve make it the friendlier option, as do its built-in machine-learning and graph-processing capabilities.

On the other hand, MapReduce is more mature and is ideal for batch processing. There are instances where it is more cost-effective because of staff availability. Its product ecosystem is also currently larger, with many supporting tools, cloud services and projects.