When it comes to application development, security is paramount from the planning stages until the product is released. But what happens if security teams are unaware of components in the software delivery pipeline? What if they aren’t even aware of the unique challenges those components bring?

This is the current predicament when it comes to software containers. If you haven’t already read Frederic Lardinois’ great article “WTF is a container,” here’s the breakdown.

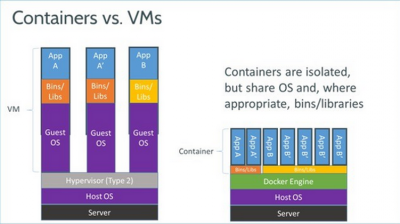

Software containers largely eliminate environment-related configuration problems. And because they are significantly lighter than virtual machines (VMs), they can also reduce your infrastructure requirements considerably.

So basically, you package your code and all its dependencies into a container that can run anywhere. And since containers are small, you can run many of them on a single computer.

What’s the Big Deal about Containers?

Containers are still in their infancy, but they are a big deal because they are extremely cost-effective. Before containers appeared on the scene, VMs were the popular technology because they allowed a single server to run many different applications, each isolated from the others.

Image source: ZDNet

VMs also made first-generation cloud applications and even web hosting services possible, but they were not very efficient, because each VM packages the application code together with an entire operating system (OS).

Although the guest OS inside a VM behaves as if it has a server all to itself, in reality it shares the physical machine with a whole bunch of other VMs, each running its own OS in isolation from the others.

Each of these guests runs on emulated hardware, which not only slows things down but also adds considerable overhead. On the plus side, you can run multiple operating systems on the same server.

Containers proved to be a far more efficient solution because they let software run reliably when it moves from one computing environment to another: for example, from a developer’s computer to a test environment and then to staging, or from a physical machine in a data center to the cloud.

Although containers have been around for years (and were architected into Linux in 2007), usability issues kept them from making a significant impact until Docker came along with its enterprise-ready solution.

Fast-forward nearly four years, and everyone from Google to JP Morgan Chase to Uber is on board with a container-based development strategy. The main player in the game is Docker, but there are alternatives such as rkt, the container runtime from CoreOS, alongside orchestration platforms like Kubernetes that manage containers at scale. The teams behind these projects are all working toward the same goal of plugging any weaknesses that turn up in the container engines themselves.

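However, the main security threat comes from the very thing that makes containers great. Containers are lightweight because they share the host’s kernel, and that shared kernel is also an attack surface: a process that breaks out of its container, or exploits a kernel vulnerability, can affect the host and every other container running on it.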

1. Avoid Using Images from Untrusted Repositories

As a rule, avoid public repositories from unofficial sources. Although it can be tempting to speed up the development cycle by pulling an image from a random registry, the vulnerabilities you could be exposed to are just not worth it.

Developers should either build their own images (the building blocks of containers) or download them from trusted sources, such as the Official Images on Docker Hub. It’s also critical that images are signed and originate from trusted sources; Docker Content Trust, for example, can be enabled so that Docker refuses to pull unsigned images.
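To make the "trusted sources" rule enforceable rather than aspirational, a build script can reject image references whose registry is not on an approved list. The Python sketch below works under stated assumptions: `TRUSTED_REGISTRIES` is a hypothetical allowlist, the parsing follows Docker's usual convention for image names (the first path component counts as a registry host only if it contains a dot or colon or is `localhost`; otherwise the image comes from Docker Hub, where names without a namespace live under `library/`), and accepting only `library/` images from Docker Hub is one example policy, not a Docker requirement. It checks where an image comes from, not whether it is signed.

```python
# Hypothetical allowlist: replace with the registries your organization trusts.
TRUSTED_REGISTRIES = {"docker.io", "registry.example.com"}


def split_registry(image_ref: str) -> tuple[str, str]:
    """Split an image reference into (registry, repository path)."""
    # Drop a digest (@sha256:...) and then a tag (a ':' after the last '/').
    name = image_ref.split("@", 1)[0]
    if name.rfind(":") > name.rfind("/"):
        name = name[: name.rfind(":")]
    first, sep, rest = name.partition("/")
    # The first component is a registry host only if it looks like one.
    if sep and ("." in first or ":" in first or first == "localhost"):
        registry, path = first, rest
    else:
        registry, path = "docker.io", name
    # Docker Hub names without a namespace are Official Images under library/.
    if registry == "docker.io" and "/" not in path:
        path = f"library/{path}"
    return registry, path


def is_trusted(image_ref: str, official_only_on_hub: bool = True) -> bool:
    """Return True if the image comes from an allowlisted registry."""
    registry, path = split_registry(image_ref)
    if registry not in TRUSTED_REGISTRIES:
        return False
    if registry == "docker.io" and official_only_on_hub:
        # Example policy: accept only Docker Official Images from Docker Hub.
        return path.startswith("library/")
    return True
```

For example, `is_trusted("nginx:1.25")` passes, while `is_trusted("someuser/nginx")` is rejected because it is a community image rather than an Official Image.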

But this alone won’t be enough, as the code inside the image still needs to be vetted and validated. And if you don’t follow container best practices, you run the risk of delays or even shelved projects (and that’s a lot of money wasted).

2. Make Attacks Difficult with the -u Flag and by Removing SUID Flags

When you’re working with a platform like Docker, you can significantly reduce the security risk by doing the following:

- Always start containers with the -u (--user) flag so that their processes run as an ordinary user instead of root

- Remove SUID (set-user-ID) bits from files in your images to reduce the risk of privilege escalation attacks
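Both measures in the list above can be scripted. The following Python sketch shows one way to do it, assuming a Linux filesystem: `docker_run_as_user` builds a `docker run` command that passes `--user`, and `strip_suid_sgid` walks a directory tree (for instance, a root filesystem being assembled for an image) and clears the setuid bit, and also the related setgid bit, on regular files. The function names and the default UID/GID of 1000 are illustrative choices, not part of Docker. Inside a Dockerfile, the same cleanup is commonly done with `find` and `chmod a-s`.

```python
import os
import stat

# Bits that let an executable run with its owner's or group's privileges.
SPECIAL_BITS = stat.S_ISUID | stat.S_ISGID


def docker_run_as_user(image: str, uid: int = 1000, gid: int = 1000) -> list[str]:
    """Build a `docker run` command that runs the container as uid:gid, not root."""
    return ["docker", "run", "--rm", "--user", f"{uid}:{gid}", image]


def strip_suid_sgid(root: str) -> list[str]:
    """Clear setuid/setgid bits on regular files under root; return changed paths."""
    changed = []
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in sorted(filenames):
            path = os.path.join(dirpath, name)
            st = os.lstat(path)
            # Skip symlinks and other non-regular files.
            if not stat.S_ISREG(st.st_mode):
                continue
            if st.st_mode & SPECIAL_BITS:
                # Keep the ordinary permission bits, drop only the special ones.
                os.chmod(path, stat.S_IMODE(st.st_mode) & ~SPECIAL_BITS)
                changed.append(path)
    return changed
```

Returning the changed paths makes it easy to log exactly which binaries lost their privileges, which helps when something in the image later fails because it relied on SUID.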

3. Use Automation Instead of SSH

Don’t install an SSH daemon inside your containers: it adds a network-facing service that both humans and bots can attack.

Instead, automate routine tasks, or adopt a third-party tool that handles them securely.
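One way to replace SSH sessions is to have your tooling run maintenance commands through `docker exec`, which executes a command inside a running container without any daemon listening for logins. This Python sketch assumes hypothetical task names and commands in `ROUTINE_TASKS` (the target image must actually contain `logrotate` and `df` for them to work) and defaults to a dry run that only prints the commands it would execute.

```python
import shlex
import subprocess

# Hypothetical maintenance tasks; the target image must ship these binaries.
ROUTINE_TASKS = {
    "rotate-logs": ["logrotate", "/etc/logrotate.conf"],
    "disk-usage": ["df", "-h", "/"],
}


def exec_command(container: str, argv: list[str]) -> list[str]:
    """Build a `docker exec` command that runs argv inside a running container."""
    return ["docker", "exec", container, *argv]


def run_tasks(container: str, tasks: dict[str, list[str]],
              dry_run: bool = True) -> dict[str, int]:
    """Run each task through `docker exec` instead of an SSH session.

    With dry_run=True the commands are only printed and reported as exit code 0.
    """
    results = {}
    for name, argv in tasks.items():
        cmd = exec_command(container, argv)
        if dry_run:
            print(f"[dry-run] {name}: {shlex.join(cmd)}")
            results[name] = 0
        else:
            # check=False: record the exit code instead of raising on failure.
            results[name] = subprocess.run(cmd, check=False).returncode
    return results
```

Called from a scheduler or CI job with `dry_run=False`, `run_tasks("web", ROUTINE_TASKS)` performs the same chores an operator would otherwise do over SSH, with every command recorded in code.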

4. Make the Host a Fortress

Containers are popular because they isolate an application and its dependencies in a self-contained unit that can run anywhere. Build on that isolation: use namespaces to keep containers separated from one another and from the host, and configure control groups (cgroups) to limit the resources each container can consume, which helps protect against denial-of-service attacks launched from a compromised or runaway container.
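Docker exposes cgroup limits as `docker run` options such as `--memory`, `--cpus`, and `--pids-limit`, and it gives each container its own PID, network, IPC, and UTS namespaces unless you explicitly ask to share the host's (for example, with `--pid=host` or `--network=host`). The Python sketch below wraps both ideas: it always applies resource limits and refuses extra arguments that would join a host namespace. The limit values and helper names are hypothetical defaults chosen for illustration; tune them to your workload.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass(frozen=True)
class Limits:
    # Hypothetical defaults; tune per workload.
    memory: str = "512m"  # hard memory cap (cgroup memory controller)
    cpus: str = "1.0"     # CPU quota, expressed as a number of CPUs
    pids: int = 200       # max processes, which blunts fork bombs


# Options that can make a container join one of the host's namespaces.
HOST_NAMESPACE_FLAGS = ("--pid", "--network", "--ipc", "--uts", "--userns")


def shares_host_namespace(args: Sequence[str]) -> bool:
    """Return True if the arguments would make the container join a host namespace."""
    for i, arg in enumerate(args):
        for flag in HOST_NAMESPACE_FLAGS:
            if arg == f"{flag}=host":
                return True
            if arg == flag and i + 1 < len(args) and args[i + 1] == "host":
                return True
    return False


def limited_run_command(image: str, limits: Optional[Limits] = None,
                        extra_args: Sequence[str] = ()) -> list[str]:
    """Build a `docker run` command with cgroup limits and isolated namespaces."""
    limits = limits or Limits()
    extra = list(extra_args)
    if shares_host_namespace(extra):
        raise ValueError("refusing to share a host namespace with the container")
    return [
        "docker", "run", "--rm",
        f"--memory={limits.memory}",
        f"--cpus={limits.cpus}",
        f"--pids-limit={limits.pids}",
        *extra,
        image,
    ]
```

Failing loudly on host-namespace flags is a deliberate design choice: it turns a silent misconfiguration into an error someone has to consciously override.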

Configuration alone is not a solid defense, though, because misconfigurations can themselves create vulnerabilities. Developers need to take a proactive approach to security by embedding security checks within operational processes.

Following this philosophy will make applications significantly more secure, which, in turn, aligns with the goals of both security and DevOps teams. Because security teams can sometimes be unaware of container operations and processes, it benefits everyone to involve them from the beginning of the build cycle.

Because container workflows already rely heavily on automation, it’s easy to make security a priority right from the planning stages. The idea of automating security into operational workflows may be new to some developers, but it’s a natural fit for containers, where nearly every aspect of operations can be automated.
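As one example of embedding security into the pipeline, a CI job could run a small policy check against every Dockerfile before an image is built. The Python sketch below is hypothetical and deliberately simple: it joins backslash-continued lines, flags RUN instructions that mention `openssh-server` or `sshd`, flags `EXPOSE 22`, and fails if the final build stage never switches to a non-root USER. It is a text-level lint under these assumptions, not a full Dockerfile parser; for instance, it cannot see a USER already set inside the base image.

```python
import re
import sys


def _logical_lines(text: str):
    """Yield (first_line_number, instruction) with backslash continuations joined."""
    buf, start = [], None
    for lineno, raw in enumerate(text.splitlines(), start=1):
        line = raw.strip()
        if not line or line.startswith("#"):
            continue  # skip blanks and comments, including inside continuations
        if start is None:
            start = lineno
        if line.endswith("\\"):
            buf.append(line[:-1].strip())
            continue
        buf.append(line)
        yield start, " ".join(buf)
        buf, start = [], None
    if buf:
        yield start, " ".join(buf)


def check_dockerfile(text: str) -> list[str]:
    """Return policy violations in a Dockerfile (an empty list means it passes)."""
    problems = []
    last_user = None
    for lineno, line in _logical_lines(text):
        parts = line.split(None, 1)
        instr = parts[0].upper()
        args = parts[1] if len(parts) > 1 else ""
        if instr == "FROM":
            last_user = None  # each build stage starts over
        elif instr == "USER":
            last_user = args.strip()
        elif instr == "RUN" and re.search(r"\b(openssh-server|sshd)\b", args):
            problems.append(f"line {lineno}: installs or starts an SSH daemon")
        elif instr == "EXPOSE" and re.search(r"\b22(/tcp)?\b", args):
            problems.append(f"line {lineno}: exposes SSH port 22")
    # The last USER in the final stage decides who the container runs as.
    if last_user is None or last_user.split(":")[0] in ("root", "0"):
        problems.append("final USER is root or unset, so containers would run as root")
    return problems


def main(path: str) -> int:
    with open(path, encoding="utf-8") as f:
        problems = check_dockerfile(f.read())
    for problem in problems:
        print(f"{path}: {problem}", file=sys.stderr)
    return 1 if problems else 0


if __name__ == "__main__" and len(sys.argv) > 1:
    sys.exit(main(sys.argv[1]))
```

In a pipeline, running the script with a Dockerfile path as its argument exits with status 1 and prints each violation when the policy fails, which is enough to stop the build before an insecure image is ever produced.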