In this article, we'll learn two basic methods of implementing Question Answering systems in Python.

A Question Answering (QA) system aims to give users a direct answer to a specific question asked in natural language. It works like a search engine but presents results differently: a search engine returns a list of links to resources that may contain the answer, while a QA system gives a direct answer to the question.

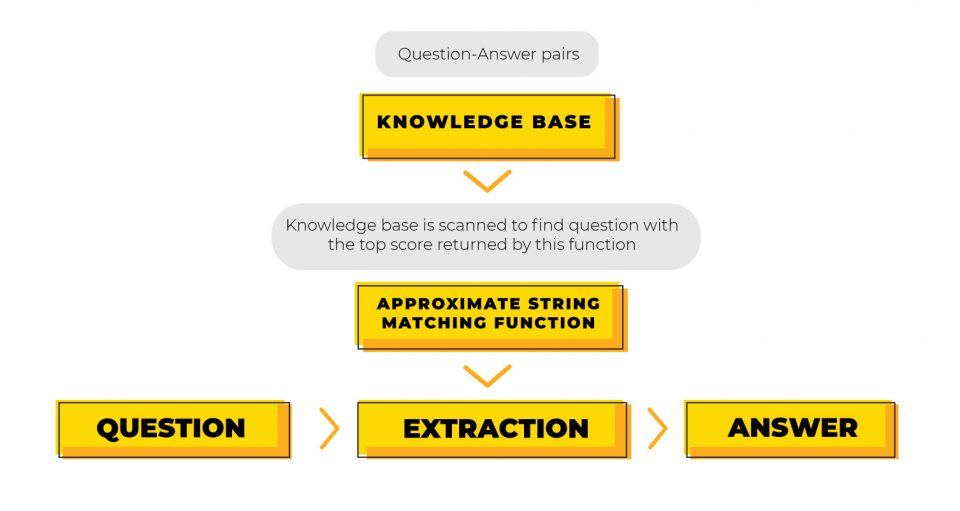

The information-retrieval process in QA systems is divided into three stages: question processing, ranking, and answer extraction. Question processing and ranking can be performed using algorithmic functions or machine learning.

QA systems with the approximate match function are as simple as the following.

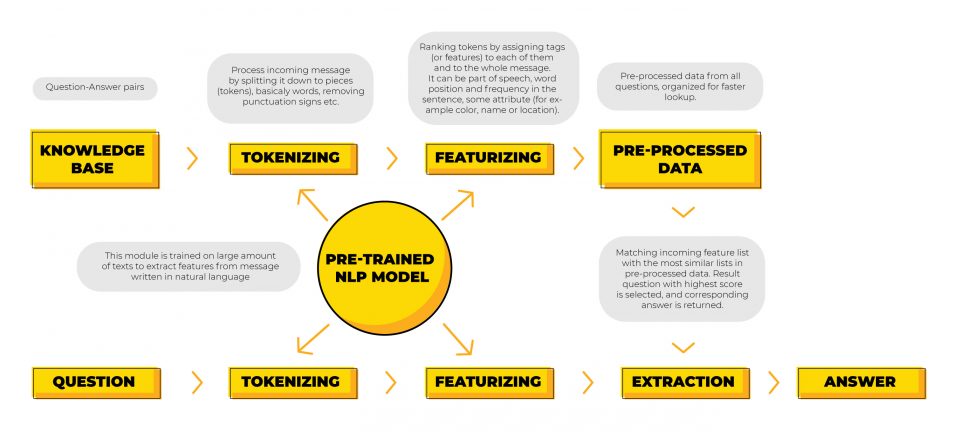

More complex QA systems use NLP techniques to understand what people talk and write about. NLP, or Natural Language Processing, is the ability of a computer program to understand human language as it is spoken or written.

Basic QA system pipeline

The pipeline of a basic QA system with a pre-trained NLP model includes two stages: data preparation and processing.

Prerequisites

To run these examples, you need Python 3. Also, install Jupyter Lab and a few Python modules.

    pip install jupyterlab
    pip install python-Levenshtein
    pip install bert-serving-server bert-serving-client

Data

For demo purposes, we use a small set of question-answer pairs. To build a high-quality QA system, you should use many question samples, specialized data storage, or a database for fast lookup, and, as you'll see at the end, it is a good idea to master training in NLP models.

In our examples, we will use the knowledge base without any modification, but you are free to insert additional question samples to improve answering quality. Let's load our data:

    import pandas as pd

    data = pd.read_csv('qa.csv')

    # this function is used to get printable results
    def getResults(questions, fn):
        def getResult(q):
            answer, score, prediction = fn(q)
            return [q, prediction, answer, score]
        return pd.DataFrame(list(map(getResult, questions)), columns=["Q", "Prediction", "A", "Score"])

    test_data = [
        "What is the population of Egypt?",
        "What is the poulation of egypt",
        "How long is a leopard's tail?",
        "Do you know the length of leopard's tail?",
        "When polar bears can be invisible?",
        "Can I see arctic animals?",
        "some city in Finland"
    ]

    data

| # | Question | Answer |
|---|---|---|
| 0 | Who determined the dependence of the boiling o... | Anders Celsius |
| 1 | Are beetles insects? | Yes |
| 2 | Are Canada 's two official languages English a... | yes |
| 3 | What is the population of Egypt? | more than 78 million |
| 4 | What is the biggest city in Finland? | Greater Helsinki |
| 5 | What is the national currency of Liechtenstein? | Swiss franc |
| 6 | Can polar bears be seen under infrared photogr... | Polar bears are nearly invisible under infrare... |
| 7 | When did Tesla demonstrate wireless communicat... | 1893 |
| 8 | What are violins made of? | different types of wood |
| 9 | How long is a leopard's tail? | 60 to 110cm |
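If the qa.csv file is not at hand, a small stand-in can be generated. The sketch below is only an assumption about the file layout: it uses the two column names that the code in this article reads ("Question" and "Answer") and includes only the rows printed in full in the table above. Rows whose text is truncated with "..." are left out rather than guessed, so queries about polar bears will not find a match in this stand-in. The file name qa_sample.csv and the function write_sample_csv are hypothetical.

```python
import csv

# Only rows that appear untruncated in the table above.
SAMPLE_ROWS = [
    ("Are beetles insects?", "Yes"),
    ("What is the population of Egypt?", "more than 78 million"),
    ("What is the biggest city in Finland?", "Greater Helsinki"),
    ("What is the national currency of Liechtenstein?", "Swiss franc"),
    ("What are violins made of?", "different types of wood"),
    ("How long is a leopard's tail?", "60 to 110cm"),
]


def write_sample_csv(path="qa_sample.csv"):
    """Write the sample rows to a CSV file with a Question/Answer header."""
    with open(path, "w", newline="", encoding="utf-8") as f:
        writer = csv.writer(f)
        writer.writerow(["Question", "Answer"])
        writer.writerows(SAMPLE_ROWS)
    return path


if __name__ == "__main__":
    print("Wrote", write_sample_csv())
```

The resulting file can then be loaded with pd.read_csv('qa_sample.csv') in place of qa.csv.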

The most straightforward QnA system using Python

Below is an example of a very naive QA system, in which the user's query must be equal to, or a part of, some stored question.

    import re

    def getNaiveAnswer(q):
        # strip punctuation (keeping apostrophes) so that e.g. a trailing "?" is ignored
        cleaned = re.sub(r"[^\w'\s]+", "", q)
        # case-insensitive literal substring match against the stored questions
        row = data.loc[data['Question'].str.contains(cleaned, case=False, regex=False)]
        if len(row) > 0:
            return row["Answer"].values[0], 1, row["Question"].values[0]
        return "Sorry, I didn't get you.", 0, ""

    getResults(test_data, getNaiveAnswer)

| # | Q | Prediction | A | Score |
|---|---|---|---|---|
| 0 | What is the population of Egypt? | What is the population of Egypt? | more than 78 million | 1 |
| 1 | What is the poulation of egypt | | Sorry, I didn't get you. | 0 |
| 2 | How long is a leopard's tail? | How long is a leopard's tail? | 60 to 110cm | 1 |
| 3 | Do you know the length of leopard's tail? | | Sorry, I didn't get you. | 0 |
| 4 | When polar bears can be invisible? | | Sorry, I didn't get you. | 0 |
| 5 | Can I see arctic animals? | | Sorry, I didn't get you. | 0 |
| 6 | some city in Finland | | Sorry, I didn't get you. | 0 |

This system has a notable drawback: it fails to find a match if the query contains spelling or grammar mistakes. Even if we pre-process the source and query texts (removing punctuation, lowercasing, etc.), the result quality remains poor. This way of matching questions is too rigid to be useful. Let's make it error-tolerant with approximate string matching.
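To illustrate why pre-processing alone is not enough, here is a minimal sketch of such a normalization step. The helper name normalize is hypothetical and not part of the article's code. It shows that lowercasing and punctuation removal make differently cased queries comparable, while a single misspelled word still prevents a match.

```python
import re


def normalize(text):
    """Lowercase, drop punctuation except apostrophes, collapse whitespace."""
    text = re.sub(r"[^\w'\s]+", "", text.lower())
    return re.sub(r"\s+", " ", text).strip()


stored = normalize("What is the population of Egypt?")
exact = normalize("what is the population of egypt")
typo = normalize("What is the poulation of egypt")

print(stored == exact)  # True: case and punctuation no longer matter
print(typo in stored)   # False: the misspelling still breaks the match
```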

Approximating QA system

Let's use approximate string matching so that our system tolerates spelling mistakes and small differences in the text. Computer science offers many methods for approximate string matching. For our demo, we use one of the measures used in fuzzy string searching, the Levenshtein distance. The Levenshtein distance between two words is the minimum number of single-character edits (insertions, deletions, or substitutions) required to change one word into another.
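To make the definition concrete, here is a minimal pure-Python sketch of the classic dynamic-programming computation of the Levenshtein distance. It is for illustration only and is not how the Levenshtein module is implemented. The similarity helper is a hypothetical normalization to the range 0 to 1 and is not the formula behind the module's ratio function.

```python
def levenshtein(a, b):
    """Minimum number of single-character insertions, deletions, or substitutions."""
    # previous[j] is the distance between the processed prefix of a and b[:j]
    previous = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        current = [i]  # turning a[:i] into an empty string takes i deletions
        for j, cb in enumerate(b, start=1):
            cost = 0 if ca == cb else 1
            current.append(min(
                previous[j] + 1,         # delete ca
                current[j - 1] + 1,      # insert cb
                previous[j - 1] + cost,  # substitute ca with cb (or keep a match)
            ))
        previous = current
    return previous[-1]


def similarity(a, b):
    """Hypothetical score in [0, 1]: 1 minus distance divided by the longer length."""
    longest = max(len(a), len(b))
    return 1.0 if longest == 0 else 1 - levenshtein(a, b) / longest


print(levenshtein("kitten", "sitting"))        # 3
print(levenshtein("population", "poulation"))  # 1
```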

Let's implement our system with the Levenshtein Python module. It contains a set of approximate string matching functions; you can try any other Python modules of your choice.

    from Levenshtein import ratio

    def getApproximateAnswer(q):
        max_score = 0
        answer = ""
        prediction = ""
        for idx, row in data.iterrows():
            score = ratio(row["Question"], q)
            if score >= 0.9:  # I'm sure, stop here
                return row["Answer"], score, row["Question"]
            elif score > max_score:  # I'm unsure, continue
                max_score = score
                answer = row["Answer"]
                prediction = row["Question"]
        if max_score > 0.8:
            return answer, max_score, prediction
        return "Sorry, I didn't get you.", max_score, prediction

    getResults(test_data, getApproximateAnswer)

| # | Q | Prediction | A | Score |
|---|---|---|---|---|
| 0 | What is the population of Egypt? | What is the population of Egypt? | more than 78 million | 1.000000 |
| 1 | What is the poulation of egypt | What is the population of Egypt? | more than 78 million | 0.935484 |
| 2 | How long is a leopard's tail? | How long is a leopard's tail? | 60 to 110cm | 1.000000 |
| 3 | Do you know the length of leopard's tail? | How long is a leopard's tail? | Sorry, I didn't get you. | 0.657143 |
| 4 | When polar bears can be invisible? | Can polar bears be seen under infrared photogr... | Sorry, I didn't get you. | 0.517647 |
| 5 | Can I see arctic animals? | What is the biggest city in Finland? | Sorry, I didn't get you. | 0.426230 |
| 6 | some city in Finland | What is the biggest city in Finland? | Sorry, I didn't get you. | 0.642857 |

The second question, which contains two mistakes, is answered with a score below 1.0 but still acceptably high. Our system is now better: it tolerates spelling errors. But it still has trouble with questions that are phrased differently in natural language. Let's lower the final score threshold in our function (0.8 in the code above) to make it more tolerant.

    from Levenshtein import ratio

    def getApproximateAnswer2(q):
        max_score = 0
        answer = ""
        prediction = ""
        for idx, row in data.iterrows():
            score = ratio(row["Question"], q)
            if score >= 0.9:  # I'm sure, stop here
                return row["Answer"], score, row["Question"]
            elif score > max_score:  # I'm unsure, continue
                max_score = score
                answer = row["Answer"]
                prediction = row["Question"]
        if max_score > 0.3:  # threshold is lowered
            return answer, max_score, prediction
        return "Sorry, I didn't get you.", max_score, prediction

    getResults(test_data, getApproximateAnswer2)

| # | Q | Prediction | A | Score |
|---|---|---|---|---|
| 0 | What is the population of Egypt? | What is the population of Egypt? | more than 78 million | 1.000000 |
| 1 | What is the poulation of egypt | What is the population of Egypt? | more than 78 million | 0.935484 |
| 2 | How long is a leopard's tail? | How long is a leopard's tail? | 60 to 110cm | 1.000000 |
| 3 | Do you know the length of leopard's tail? | How long is a leopard's tail? | 60 to 110cm | 0.657143 |
| 4 | When polar bears can be invisible? | Can polar bears be seen under infrared photogr... | Polar bears are nearly invisible under infrare... | 0.517647 |
| 5 | Can I see arctic animals? | What is the biggest city in Finland? | Greater Helsinki | 0.426230 |
| 6 | some city in Finland | What is the biggest city in Finland? | Greater Helsinki | 0.642857 |

Let's examine the results. Our system now returns more answers, including responses to questions phrased in different words. But look at result #5: it is a false positive, because the answer does not match the question semantically. We need a balanced threshold that depends on the data set, trading off tolerance to rephrasing against correctness. This Python code is straightforward, but it is impractical on large volumes of data because it iterates over the entire dataset for every query.
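The trade-off can be made visible with a small threshold sweep over the scores from the table above. In this sketch the correctness labels are our own reading of that table (only row #5 is treated as a wrong match), and the function name evaluate is hypothetical. With only seven samples, the numbers illustrate the idea rather than recommend a value.

```python
# (score, is_correct) pairs: scores come from the table above; the labels are
# an assumption, treating every predicted question except row #5 as correct.
RESULTS = [
    (1.000000, True),
    (0.935484, True),
    (1.000000, True),
    (0.657143, True),
    (0.517647, True),
    (0.426230, False),
    (0.642857, True),
]


def evaluate(threshold):
    """Return (right answers, wrong answers, refusals) for a score threshold."""
    right = sum(1 for score, ok in RESULTS if score > threshold and ok)
    wrong = sum(1 for score, ok in RESULTS if score > threshold and not ok)
    refused = len(RESULTS) - right - wrong
    return right, wrong, refused


for threshold in (0.3, 0.5, 0.8):
    print(threshold, evaluate(threshold))
# 0.3 (6, 1, 0)
# 0.5 (6, 0, 1)
# 0.8 (3, 0, 4)
```

A higher threshold refuses more questions, while a lower one lets the false positive through.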

You now have some ideas for improving answer quality: adjusting the thresholds, adding more question samples, combining several matching functions, splitting sentences into words and matching at the word level as well, and so on.
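As one illustration of word-level matching, the sketch below scores two sentences by the overlap of their word sets (Jaccard similarity). This technique is swapped in here for illustration and is not part of the article's code. The names tokens and word_overlap are hypothetical.

```python
import re


def tokens(text):
    """Lowercased set of word tokens; apostrophes are kept inside words."""
    return set(re.findall(r"[\w']+", text.lower()))


def word_overlap(a, b):
    """Jaccard similarity of the two token sets (0 = disjoint, 1 = identical)."""
    ta, tb = tokens(a), tokens(b)
    if not ta and not tb:
        return 1.0
    return len(ta & tb) / len(ta | tb)


stored = "What is the biggest city in Finland?"
print(word_overlap("some city in Finland", stored))       # 0.375
print(word_overlap("Can I see arctic animals?", stored))  # 0.0
```

Unlike the character-level ratio, this score gives the unrelated query about arctic animals no credit at all, although it in turn ignores word order and misspellings.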

But in fact, you shouldn't do that. There are advanced libraries developed by Google and Facebook, which already deal with the subject matter, and they are doing it pretty well. Let's go to the next level with NLP models.

NLP-powered / BERT QA system

We use bert-as-service to implement our next QA function. BERT, or Bidirectional Encoder Representations from Transformers, is a new method of pre-training language representations developed by Google. Bert-as-service uses BERT as a sentence encoder, allowing you to map sentences into fixed-length representations in a few lines of Python code.

Installation

Install the server and client via pip (consult the documentation for details):

    pip install bert-serving-server bert-serving-client

Download a pre-trained BERT model. We use BERT-Base Cased, but you can try another model that fits your data better. Download and unpack the archive.

Start the service, pointing model_dir to the folder with your downloaded model. Also, set the maximum sentence length if the default value of 25 doesn't fit your texts:

    bert-serving-start -model_dir /tmp/cased_L-12_H-768_A-12/ -num_worker=4 -max_seq_len=64

Ranking (or pre-processing)

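Before we use the service, we need to encode the questions from our knowledge base into BERT sentence vectors and save them, together with their lengths (norms), for later ranking.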

    from bert_serving.client import BertClient
    import numpy as np

    def encode_questions():
        bc = BertClient()
        questions = data["Question"].values.tolist()
        print("Questions count", len(questions))
        print("Start to calculate encoder....")
        # one fixed-length vector per question
        questions_encoder = bc.encode(questions)
        np.save("questions", questions_encoder)
        # Euclidean length of each vector, reused later when scoring queries
        questions_encoder_len = np.sqrt(
            np.sum(questions_encoder * questions_encoder, axis=1)
        )
        np.save("questions_len", questions_encoder_len)
        print("Encoder ready")

    encode_questions()

    Questions count 10
    Start to calculate encoder....
    Encoder ready
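The vector lengths saved in this step are the denominators of a cosine similarity, which the Run step uses to rank the stored questions against a query. The sketch below shows the same computation in pure Python on toy three-dimensional vectors, so it runs without a BERT server. The vectors and the name cosine_similarity are made up for illustration; real BERT-Base sentence vectors have 768 dimensions.

```python
import math


def cosine_similarity(u, v):
    """Dot product of u and v divided by the product of their lengths."""
    dot = sum(x * y for x, y in zip(u, v))
    norm_u = math.sqrt(sum(x * x for x in u))
    norm_v = math.sqrt(sum(y * y for y in v))
    return dot / (norm_u * norm_v)


# Toy stand-ins for encoded questions and an encoded query.
stored = [[1.0, 0.0, 0.0], [1.0, 1.0, 0.0], [0.0, 0.0, 2.0]]
query = [2.0, 2.0, 0.0]

scores = [cosine_similarity(query, row) for row in stored]
best = max(range(len(scores)), key=scores.__getitem__)
print(best, round(scores[best], 6))  # 1 1.0
```

The score depends only on the direction of the vectors, not on their length, which is why the query [2, 2, 0] matches the stored vector [1, 1, 0] with a score of 1.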

Run

    from bert_serving.client import BertClient
    import numpy as np

    class BertAnswer():
        def __init__(self):
            self.bc = BertClient()
            self.q_data = data["Question"].values.tolist()
            self.a_data = data["Answer"].values.tolist()
            self.questions_encoder = np.load("questions.npy")
            self.questions_encoder_len = np.load("questions_len.npy")

        def get(self, q):
            query_vector = self.bc.encode([q])[0]
            # cosine similarity between the query and every stored question
            score = np.sum((query_vector * self.questions_encoder), axis=1) / (
                self.questions_encoder_len * (np.sum(query_vector * query_vector) ** 0.5)
            )
            # index of the most similar stored question
            top_id = np.argsort(score)[::-1][0]
            if float(score[top_id]) > 0.94:
                return self.a_data[top_id], score[top_id], self.q_data[top_id]
            return "Sorry, I didn't get you.", score[top_id], self.q_data[top_id]

    bm = BertAnswer()

    def getBertAnswer(q):
        return bm.get(q)

    getResults(test_data, getBertAnswer)

| # | Q | Prediction | A | Score |
|---|---|---|---|---|
| 0 | What is the population of Egypt? | What is the population of Egypt? | more than 78 million | 1.000000 |
| 1 | What is the poulation of egypt | What is the population of Egypt? | more than 78 million | 0.967848 |
| 2 | How long is a leopard's tail? | How long is a leopard's tail? | 60 to 110cm | 1.000000 |
| 3 | Do you know the length of leopard's tail? | How long is a leopard's tail? | 60 to 110cm | 0.970769 |
| 4 | When polar bears can be invisible? | Can polar bears be seen under infrared photogr... | Polar bears are nearly invisible under infrare... | 0.975287 |
| 5 | Can I see arctic animals? | Can polar bears be seen under infrared photogr... | Polar bears are nearly invisible under infrare... | 0.964607 |
| 6 | some city in Finland | What is the biggest city in Finland? | Sorry, I didn't get you. | 0.932894 |

Our function correctly answered most questions, but question #6 remains unanswered. We can play with the score threshold and add more question samples to improve understanding in our case, but in general, we need a better way: fine-tuning the model.

Let's try the fine-tuned BERT model in the next step.

Fine-tune pre-trained BERT QA systems

Pre-trained BERT models often show pretty good results on many tasks. However, to release the true power of BERT, fine-tuning on domain-specific data is necessary.

We follow the instructions in "Sentence (and sentence-pair) classification tasks." Clone the repository:

    git clone https://github.com/google-research/bert.git

Download this script and run it to download "GLUE data":

    python download_glue_data.py

Then run the fine-tuning process:

    export BERT_BASE_DIR=/tmp/cased_L-12_H-768_A-12
    export GLUE_DIR=/tmp/glue_data

    python run_classifier.py \
      --task_name=MRPC \
      --do_train=true \
      --do_eval=true \
      --data_dir=$GLUE_DIR/MRPC \
      --vocab_file=$BERT_BASE_DIR/vocab.txt \
      --bert_config_file=$BERT_BASE_DIR/bert_config.json \
      --init_checkpoint=$BERT_BASE_DIR/bert_model.ckpt \
      --max_seq_length=128 \
      --train_batch_size=32 \
      --learning_rate=2e-5 \
      --num_train_epochs=3.0 \
      --output_dir=/tmp/mrpc_output/ \
      --do_lower_case=False

The fine-tuned model is stored at /tmp/mrpc_output/. Look inside it and find the fine-tuned model checkpoint, which has a name like model.ckpt-343. Remember to pass it as a parameter to the BERT server.

Now start BertServer by putting three pieces together:

    bert-serving-start -model_dir /tmp/cased_L-12_H-768_A-12/ -num_worker=4 -max_seq_len=64 \
      -tuned_model_dir=/tmp/mrpc_output/ -ckpt_name=model.ckpt-343

After the server starts, you should find this line in the log:

    I:GRAPHOPT:checkpoint (override by the fine-tuned model): /tmp/mrpc_output/model.ckpt-343
    ...
    I:VENTILATOR:all set, ready to serve request!

Now let's repeat the pre-processing and run steps:

    from bert_serving.client import BertClient
    import numpy as np

    def encode_questions2():
        bc = BertClient()
        questions = data["Question"].values.tolist()
        print("Questions count", len(questions))
        print("Start to calculate encoder....")
        questions_encoder = bc.encode(questions)
        np.save("questions2", questions_encoder)
        questions_encoder_len = np.sqrt(
            np.sum(questions_encoder * questions_encoder, axis=1)
        )
        np.save("questions_len2", questions_encoder_len)
        print("Encoder ready")

    encode_questions2()

    Questions count 10
    Start to calculate encoder....
    Encoder ready

    from bert_serving.client import BertClient
    import numpy as np

    class TunedBertAnswer():
        def __init__(self):
            self.bc = BertClient()
            self.q_data = data["Question"].values.tolist()
            self.a_data = data["Answer"].values.tolist()
            self.questions_encoder = np.load("questions2.npy")
            self.questions_encoder_len = np.load("questions_len2.npy")

        def get(self, q):
            query_vector = self.bc.encode([q])[0]
            score = np.sum((query_vector * self.questions_encoder), axis=1) / (
                self.questions_encoder_len * (np.sum(query_vector * query_vector) ** 0.5)
            )
            top_id = np.argsort(score)[::-1][0]
            if float(score[top_id]) > 0.94:
                return self.a_data[top_id], score[top_id], self.q_data[top_id]
            return "Sorry, I didn't get you.", score[top_id], self.q_data[top_id]

    bm2 = TunedBertAnswer()

    def getTunedBertAnswer(q):
        return bm2.get(q)

    getResults(test_data, getTunedBertAnswer)

| # | Q | Prediction | A | Score |
|---|---|---|---|---|
| 0 | What is the population of Egypt? | What is the population of Egypt? | more than 78 million | 1.000000 |
| 1 | What is the poulation of egypt | What is the population of Egypt? | more than 78 million | 0.968978 |
| 2 | How long is a leopard's tail? | How long is a leopard's tail? | 60 to 110cm | 1.000000 |
| 3 | Do you know the length of leopard's tail? | How long is a leopard's tail? | 60 to 110cm | 0.967964 |
| 4 | When polar bears can be invisible? | Can polar bears be seen under infrared photogr... | Polar bears are nearly invisible under infrare... | 0.960934 |
| 5 | Can I see arctic animals? | Can polar bears be seen under infrared photogr... | Polar bears are nearly invisible under infrare... | 0.964808 |
| 6 | some city in Finland | What is the biggest city in Finland? | Greater Helsinki | 0.946184 |

All the questions are now answered. Please note that this is just an example. With other knowledge bases, the results may be better or worse, so the available techniques need to be evaluated case by case.

Conclusion

The number and content of the examples have a significant impact on the results of the pre-trained model when the score is near the threshold. Try changing some test questions, and you'll get a different result; even some correct answers may disappear. So the pre-trained model can handle many input variants, but it doesn't solve all possible cases.

To build a solid QnA system, we need many question examples, aiming to raise the prediction score close to 1 for the expected input questions. Training on your own domain-specific data instead of general data may also give better predictions.

In the examples above, we demonstrated how the quality of a QA system depends on the technology behind it, from a single string-matching function to a pre-trained NLP model. We built a basic Question Answering system with natural language understanding in a few lines of Python code. Such systems can be used standalone for Frequently Asked Questions search, documentation search, etc.

A QA system can also significantly improve the quality of chatbots. A large number of questions and answers can make training more difficult, but a QA system can handle this task quite well.