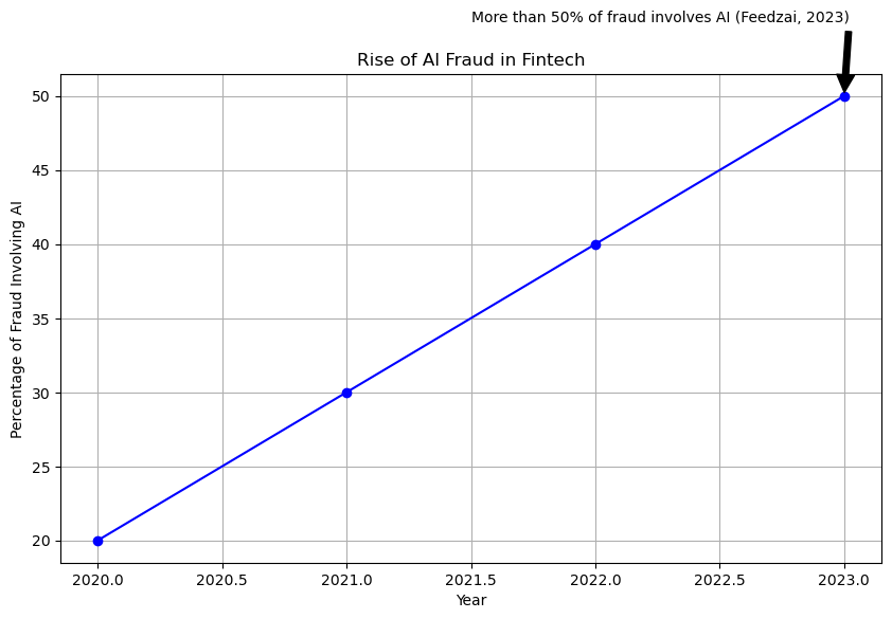

According to Feedzai, a global leader in financial crime prevention, more than 50% of fraud involves the use of AI, including hyper-realistic deepfakes and synthetic identities.

As fintech continues its rapid expansion, AI is being integrated both to drive innovation and to prevent malicious activity. That same technology has fueled a corresponding surge in AI-driven fraud, creating a critical need for advanced security measures.

AI Scams Surge

AI-driven scams have surged across the fintech sector in 2025, with criminals leveraging generative AI to create highly convincing fraud schemes. Scammers use AI to produce hyper-realistic deepfake videos and voice clones, impersonating financial experts and officials.

Here are just a few recent stories:

- The writer of this article was contacted by a deepfake: someone on Instagram imitating her cousin’s voice. She received a voice message from “her cousin” saying that it would be a good idea to invest in bitcoin via her account, which had been hacked.

- In 2024, a deepfake of Elon Musk scammed an 82-year-old retiree out of USD $690,000 over the course of several weeks. He was absolutely convinced that the videos and audio were actually Elon Musk.

- “I mean, the picture of him — it was him,” Steve Beauchamp told The New York Times when describing the deepfake video he had seen of Elon Musk.

- Recently, a video conference at Arup Global involved deepfakes impersonating the company’s CFO and other employees. They were convincing enough that a member of staff was duped into making 15 transactions totaling HK$200M (almost USD $26M) to Hong Kong bank accounts.

The above stories aren’t surprising if you consider that the use of AI to generate audio and visual cloning increased by 118% in 2024!

What can we do to fight back?

1. Use AI-Powered Fraud Detection

AI analyzes vast amounts of transaction data in real time by recognizing patterns, detecting anomalies, and assigning risk scores to transactions. It uses machine learning models to distinguish between legitimate and fraudulent activities, continuously improving through feedback loops. AI also employs predictive analytics and natural language processing to forecast potential fraud and analyze unstructured data, triggering automated responses to prevent fraud before it causes significant damage.
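As a rough illustration of the anomaly-detection and risk-scoring idea described above, here is a minimal Python sketch. It does not show how any vendor or production system works: the function names `risk_score` and `decide`, the thresholds, and the sample amounts are all hypothetical, and a simple z-score stands in for a trained machine learning model.

```python
from statistics import mean, stdev


def risk_score(history: list[float], amount: float) -> float:
    """Return a 0-1 score for how unusual `amount` is compared with past amounts."""
    if len(history) < 2:
        # Assumption: with too little history, treat the transaction as medium risk.
        return 0.5
    mu = mean(history)
    sigma = stdev(history)
    if sigma == 0:
        # All past amounts were identical: any different amount is maximally unusual.
        return 0.0 if amount == mu else 1.0
    z = abs(amount - mu) / sigma
    # Scale the z-score onto 0..1; 4 or more standard deviations saturates at 1.0.
    return min(z / 4.0, 1.0)


def decide(score: float, block_at: float = 0.9, review_at: float = 0.5) -> str:
    """Map a risk score to an automated response (thresholds are illustrative)."""
    if score >= block_at:
        return "block"
    if score >= review_at:
        return "review"
    return "allow"


# Hypothetical past transaction amounts for one account.
history = [42.0, 38.5, 51.0, 45.25, 40.0]

for amount in (44.0, 60.0, 5000.0):
    score = risk_score(history, amount)
    print(f"{amount:>8.2f} -> score {score:.2f}, action: {decide(score)}")
```

In a real system the score would typically come from a model trained on many signals (device, location, merchant, timing) rather than the amount alone, and confirmed fraud cases would be fed back in to retrain it.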

AI is significantly more efficient and accurate at preventing fraud than traditional methods. Because it detects anomalies instantly and continuously learns from new fraud patterns, it can identify and respond to fraudulent activity much faster and with greater precision than manual processes, reducing the risk of financial losses and enhancing security.

According to a case study by Best Practice AI, American Express uses AI to analyze over $1 trillion in transactions annually to minimize fraud.

2. Invest in Recruiting More Personnel with AI Expertise

Knowledge and application of AI-based technology are critical if you are serious about preventing fraud, both for your customers and peers and for your own bottom line.

As AI capabilities advance, so do the tools and tactics used by cybercriminals. From deepfake impersonations of financial executives to hyper-personalized phishing emails and synthetic identity creation, fintechs are facing a new generation of fraud—smarter, faster, and harder to detect. The surge in AI-driven scams isn’t slowing down—and neither can the industry’s response.

To stay ahead, financial institutions must adopt equally intelligent defenses. Modern AI-powered fraud detection can analyze billions of transactions in milliseconds, identify subtle patterns, and adapt in real time—far beyond the capabilities of traditional systems.

But tools alone aren’t enough. Combating this new era of fraud also means investing in AI expertise, strengthening internal processes, and partnering with experienced technology firms.

At Intersog, we help fintech leaders build secure, high-performance systems equipped to outpace today’s most advanced AI fraud tactics. Whether you’re modernizing your fraud detection stack or exploring AI automation, our team is here to help.

Now is the time to act. Integrate smarter AI solutions—and turn the tide against AI-powered fraud.

References:

- Convera Warns of Sophisticated AI Scam Surge in 2025, FinTech Magazine

- Outsourcing in 2025: Building Flexible, High-Performing Teams Across Borders, Intersog

- How Amex Uses AI To Automate 8 Billion Risk Decisions (And Achieve 50% Less Fraud)

- The Rise of AI-Powered Bots in Payment Fraud & How FinTechs Can Protect Themselves

- Top 5 Cases of AI Deepfake Fraud From 2024 Exposed, Incode